Student Research

John Bowker

Since his first semester, John has been involved, together with a few colleagues, in the so-called drummer communication project, which is in effect a networked, real-time improvisation and communication interface for percussionists. Along the way, John applied his interest in programming to learn how such a system might operate, and in doing so cultivated a deeper interest in programming and problem solving. His interests in animation, music analysis, cell phone programming, sound, and game development motivate his research into shared virtual experiences for musicians. On the side, he has been building a set of reusable tools for sound applications.

Yushen Han

Yushen is currently working on a "desolo" project as a Research Assistant to Prof. Raphael. The goal of this project is to develop a generic approach for producing music recordings that contain only the accompaniment part, for use by soloists. The current approach addresses this problem by isolating the accompanying instruments from the soloist using only a monaural recording. Given explicit knowledge of the audio in the form of a score match from Prof. Raphael's previous research, music events are modelled using the Short-Time Fourier Transform (STFT), and the contribution of each music event to the spectrogram is estimated. This project is documented in a paper accepted for the ISMIR 2007 conference. Illustrations and audio examples may be found online. A new approach that synthesizes the accompaniment part in real time from sound samples is also being explored.
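
As a rough illustration of score-informed separation, the sketch below assumes that the score match supplies the soloist's fundamental frequency during one analysis frame. It zeroes the DFT bins near that note's first few harmonics and keeps the rest as an estimate of the accompaniment. This binary harmonic mask is a deliberately simple stand-in, not the event model from the ISMIR 2007 paper. It also uses a single rectangular-window frame with a naive DFT rather than a full windowed, overlap-added STFT. The names `solo_mask` and `remove_solo`, and their parameters, are hypothetical.

```python
import cmath
import math


def dft(frame):
    """Naive O(N^2) DFT of one real-valued frame (fine for a small sketch)."""
    n = len(frame)
    return [sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Inverse of dft(); returns the real part of the resynthesized frame."""
    n = len(spectrum)
    return [(sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]


def solo_mask(n, sr, f0, n_harmonics=5, width_bins=1):
    """Binary mask: 0.0 on bins within width_bins of the solo note's harmonics, 1.0 elsewhere.

    Hypothetical helper: f0 is assumed to come from the score match for this frame.
    Each positive-frequency bin and its mirrored negative-frequency bin are zeroed
    together so that the resynthesized frame stays real-valued.
    """
    mask = [1.0] * n
    for h in range(1, n_harmonics + 1):
        centre = round(h * f0 * n / sr)
        if centre > n // 2:
            break  # this harmonic (and all higher ones) lies above the Nyquist frequency
        for b in range(centre - width_bins, centre + width_bins + 1):
            if 0 <= b <= n // 2:
                mask[b] = 0.0
                mask[(n - b) % n] = 0.0
    return mask


def remove_solo(frame, sr, f0):
    """Estimate the accompaniment in one frame by masking out the solo note's harmonics."""
    spectrum = dft(frame)
    mask = solo_mask(len(frame), sr, f0)
    return idft([s * m for s, m in zip(spectrum, mask)])
```

Take a 500 Hz solo tone plus a 312.5 Hz accompaniment tone in a 256-sample frame at 8 kHz. Both tones fall exactly on DFT bins, so `remove_solo(mix, 8000, 500)` returns the accompaniment tone essentially unchanged. Real recordings would need windowing, overlapping frames, and soft masks, because the partials of the two parts overlap and leak across bins.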

Yushen is a second-year PhD student in the music informatics track. He obtained his bachelor's degree in Electrical Engineering from Zhejiang University, Hangzhou, P.R. China, in 2004.

Music as Different as Possible - Christy Keele

This nearly completed project is a collaboration with Don Byrd. In contrast to much recent research on musical similarity, this project explores difference in music, musical style space, and ways of measuring difference. In the spring of 2007, Christy administered an online survey to 80 participants. She asked each participant to select, out of ten musical examples, the six they thought were most different from one another. Although future efforts would likely use a different survey design, this represents one of the first data collections aimed at this particular problem.
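
One computational counterpart to the survey task is to pick, from a pool of items, the subset whose members are as far apart as possible. The sketch below assumes a hypothetical symmetric matrix of pairwise distances between examples; the project does not specify such a measure. It finds the size-k subset with the largest total pairwise distance by brute force. That is cheap at the survey's scale, since choosing 6 of 10 gives only 210 subsets.

```python
from itertools import combinations


def most_different_subset(dist, k):
    """Return (subset, score): the k indices whose summed pairwise distance is largest.

    `dist` is a hypothetical symmetric matrix of pairwise distances between examples.
    Brute force over all C(n, k) subsets; on ties the first subset found is kept.
    """
    best, best_score = None, float("-inf")
    for subset in combinations(range(len(dist)), k):
        score = sum(dist[i][j] for i, j in combinations(subset, 2))
        if score > best_score:
            best, best_score = subset, score
    return best, best_score


# Toy example: four "pieces" placed on a one-dimensional style axis.
positions = [0, 1, 2, 10]
dist = [[abs(a - b) for b in positions] for a in positions]
print(most_different_subset(dist, 2))  # ((0, 3), 10)
```

Summing distances is only one possible criterion. Maximizing the smallest pairwise distance instead would favour subsets with no two similar items. Survey data like this could help indicate which criterion better matches listeners' judgments.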

Christy is a second-year master's student in music theory. She teaches sophomore written music theory and earned her bachelor's degree in piano performance from the Univ. of Nebraska-Lincoln.

An Open-Source Phase Vocoder with some Novel Visualizations - Kyung Ae Lim

This project is a phase vocoder implementation that uses multiple synchronized visual displays to clarify the signal processing techniques involved.

The program performs standard phase vocoder operations, such as time-stretching and pitch-shifting, which are based on analysis and resynthesis using the Short-Time Fourier Transform (STFT).
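
A phase vocoder can stretch time without garbling the sound because of one key step. It estimates each bin's true frequency from how much that bin's phase advances between two analysis frames. Resynthesis then advances the output phase by that frequency over a different, synthesis hop. The sketch below shows that step for a single bin, assuming a periodic Hann window and a naive single-bin DFT. The function names and parameters are illustrative, not taken from this project's code.

```python
import cmath
import math


def stft_bin(x, start, k, n_fft):
    """Complex value of DFT bin k for the Hann-windowed frame x[start:start + n_fft]."""
    acc = 0j
    for t in range(n_fft):
        w = 0.5 - 0.5 * math.cos(2 * math.pi * t / n_fft)  # periodic Hann window
        acc += w * x[start + t] * cmath.exp(-2j * math.pi * k * t / n_fft)
    return acc


def true_frequency(phase_prev, phase_curr, k, n_fft, hop, sr):
    """Refine bin k's frequency (Hz) from its phase advance over one analysis hop."""
    expected = 2 * math.pi * k * hop / n_fft  # advance of a sinusoid exactly at the bin centre
    deviation = phase_curr - phase_prev - expected
    deviation = (deviation + math.pi) % (2 * math.pi) - math.pi  # wrap into [-pi, pi)
    return (expected + deviation) * sr / (2 * math.pi * hop)


def synthesis_phase(prev_syn_phase, freq_hz, syn_hop, sr):
    """Advance the output phase by the refined frequency over the synthesis hop."""
    return prev_syn_phase + 2 * math.pi * freq_hz * syn_hop / sr


# A 1010 Hz tone lies between bins 32 (1000 Hz) and 33 (1031.25 Hz) at 8 kHz / 256 points.
sr, n_fft, hop = 8000, 256, 64
x = [math.cos(2 * math.pi * 1010 * t / sr) for t in range(n_fft + hop)]
p0 = cmath.phase(stft_bin(x, 0, 32, n_fft))
p1 = cmath.phase(stft_bin(x, hop, 32, n_fft))
print(true_frequency(p0, p1, 32, n_fft, hop, sr))  # close to 1010, not the bin centre 1000
```

The phase wrap only gives the right answer when the true frequency lies within sr / (2 · hop) of the bin centre, which is 62.5 Hz here. Using a synthesis hop of 80 samples against this analysis hop of 64 would stretch the sound by a factor of 1.25. Pitch-shifting is commonly done by time-stretching and then resampling.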

Alongside conventional 2-D displays of digital audio, including spectrograms, waveform displays, and various frequency-domain representations, the program offers novel 3-D visualizations of the frequency domain. These help users who are beginning to learn about digital signal processing and the phase vocoder.

This application is intended to serve as an open-source educational tool in the near future.

Kyung Ae Lim recently completed her Master of Informatics capstone project, working with Ian Knopke and Chris Raphael.

Her capstone paper is available here.

DJMIRI - Joey Morwick

The goal of the DJMIRI project (a Dynamic Java-based Music Information Retrieval Interface) is to develop a novel interface for existing and future content-based MIR systems that provides a more effective method of querying. DJMIRI will also be designed to support plugins that can be written to communicate with any existing MIR system.

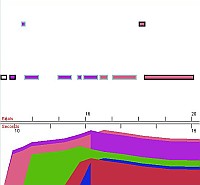

Dynamic queries allow users to continuously update a query (usually through graphical widgets such as sliders) and see rapidly updated feedback based on that query (often in the form of a 2-dimensional plot). DJMIRI will support dynamic querying of these systems by accepting continuous user input (notes from an electronic keyboard). It will produce an intuitive visualization of each system's response to that input as it changes over time. This lets users adapt their query as they form it, based on preliminary feedback. The visualization will be a graph showing how confident the system is that the musical content played so far belongs to one song or another. At any point in time in this graph, the most recent notes have the most influence. Earlier notes have progressively less influence the further back they are.
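
One simple way to make recent notes count more is an exponentially decaying sum of per-note match scores for each candidate song. These sums can then be normalized into confidences and plotted over time. The sketch assumes that each plugin returns a non-negative match score per song for every new note. That interface, the function name, and the `decay` parameter are hypothetical, not DJMIRI's actual design.

```python
def decayed_confidence(note_scores, decay=0.7):
    """Per-song confidence after each note, with older notes weighted by decay**age.

    note_scores: one dict per played note mapping song -> non-negative match score
    (a hypothetical per-note score that an MIR plugin might return).
    Returns one dict per note mapping song -> confidence; the values sum to 1
    once any song has a positive score.
    """
    totals = {}
    history = []
    for scores in note_scores:
        for song in set(totals) | set(scores):
            # Older evidence fades geometrically; the newest note enters with weight 1.
            totals[song] = decay * totals.get(song, 0.0) + scores.get(song, 0.0)
        norm = sum(totals.values())
        history.append({song: (v / norm if norm > 0 else 0.0) for song, v in totals.items()})
    return history


h = decayed_confidence([{"A": 1.0, "B": 0.0}, {"A": 0.0, "B": 1.0}], decay=0.5)
# After the second note, B's fresh match outweighs A's decayed one:
# h[1] is {"A": 1/3, "B": 2/3} (up to floating-point rounding).
```

A `decay` close to 1 lets the graph remember more of the melody. A smaller value makes it react faster to the latest notes.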

Joey Morwick is a Ph.D. student in Computer Science (Informatics) working with Don Byrd.

Polyphonic Pitch Recognition - Eric Nichols

Eric Nichols (M.S., Computer Science, Indiana University; B.S., Mathematics, Montana State University--Bozeman) works in Music Informatics as a Research Assistant for Prof. Chris Raphael. Recent research projects have been in the general area of automatic audio-to-score conversion, such as polyphonic pitch detection and signal segmentation. Other projects have involved distributed musical performance over the Internet and automatic generation of fingerings in piano scores.

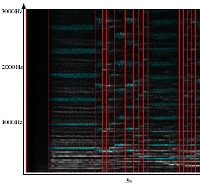

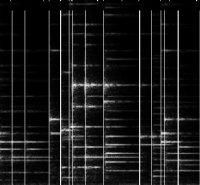

Nichols and Raphael describe a dynamic programming approach to globally optimal audio partitioning in a paper accepted for the ISMIR 2006 conference. The image and associated audio file (wav) illustrate the approximate note boundaries that this method detected in an audio recording of Chopin's Prelude No. 7, Op. 28. Vertical lines in the bottom half of the image correspond to true note boundaries, while those in the top half were computed algorithmically. The detected boundaries are represented by audible clicks in the audio file. The partitions generated here are intended to improve the frequency resolution of Fourier analysis in downstream signal processing, so the presence of extra partition points is not necessarily problematic.
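
The general idea of globally optimal partitioning by dynamic programming can be sketched on a one-dimensional feature sequence, for example one value per audio frame. The best segmentation of the first j frames is the best segmentation of some shorter prefix plus one final segment, so all split points are found exactly in O(n²) time. The cost used here is an illustrative assumption, not the model from the ISMIR 2006 paper: squared deviation from the segment mean, plus a fixed `penalty` per segment.

```python
def optimal_partition(values, penalty):
    """Return the start indices of the minimum-cost segmentation of `values`.

    Cost = sum over segments of (squared deviation from the segment mean) + penalty.
    """
    n = len(values)
    # Prefix sums of x and x^2 give each segment's cost in O(1).
    s1, s2 = [0.0] * (n + 1), [0.0] * (n + 1)
    for i, v in enumerate(values):
        s1[i + 1] = s1[i] + v
        s2[i + 1] = s2[i] + v * v

    def seg_cost(i, j):
        """Squared deviation of values[i:j] from its mean."""
        total = s1[j] - s1[i]
        return (s2[j] - s2[i]) - total * total / (j - i)

    best = [0.0] + [float("inf")] * n  # best[j]: minimum cost of partitioning values[:j]
    back = [0] * (n + 1)               # back[j]: start of the last segment in that optimum
    for j in range(1, n + 1):
        for i in range(j):
            cost = best[i] + seg_cost(i, j) + penalty
            if cost < best[j]:
                best[j], back[j] = cost, i

    # Walk the back-pointers from the end to recover every segment start.
    starts, j = [], n
    while j > 0:
        starts.append(back[j])
        j = back[j]
    return starts[::-1]


print(optimal_partition([0, 0, 0, 5, 5, 5, 1, 1], penalty=1.0))  # [0, 3, 6]
```

A larger `penalty` yields fewer, longer segments. Extra partition points are not necessarily problematic in this application, so erring toward a smaller penalty may be acceptable.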

Mr. Nichols is a Ph.D. student in Computer Science and Cognitive Science and a member of the Fluid Analogies Research Group (FARG). In addition to music informatics, he is interested in models of music cognition. Specifically, he is developing a model of melodic expectation under the supervision of Prof. Douglas Hofstadter of the Center for Research on Concepts and Cognition (CRCC).

Pablo Vanwoerkom

Pablo Vanwoerkom's research interests lie in the area of music creation tools. Currently, together with Ian Knopke and John Bowker, he is working on a Java application that will initially allow people to drum together over the web. It can easily be extended to any kind of slowly evolving music, such as trance and techno, or improvised jams over a short chord sequence. He is also interested in other web-based music applications, such as collaborative composition. Finally, his interest in improvisation draws him toward software that can stimulate the creative and spontaneous process of improvising.

Pablo Vanwoerkom is in his second year as a Master's student in Music Informatics. He received a B.A. in Computer Science and Mathematics, with a minor in Music, from Carroll College in Helena, Montana.

Yupeng Gu -- Automatic Piano Concerto Accompaniment

This project aims to develop an automatic computer system that can serve the role of an orchestra in real-time performances of classical piano concertos. The system is an extension of Prof. Christopher Raphael's "Music Plus One" accompaniment system. Because it is intended for serious pianists, however, there are several new challenges.

First, score following (the listening part of the system) is generally harder for piano music for two reasons: (1) piano audio is usually more complex than that of other instruments, and (2) the system must handle performance errors, which occur more frequently in piano playing. We circumvent (1) by using a reproducing piano that generates digital information. We address (2) with an HMM-type model that tries to interpret imperfect piano performances correctly. This part of the work is documented in a paper accepted at ICMC 2009.

Second, anticipating the soloist's performance and driving the synthesized accompaniment musically (the playing part of the system) is still far from perfect. We currently use a Kalman-filter-type model but are looking for better solutions to this problem. Both the current listening and playing parts can be trained automatically for the specific performer and piece under consideration.
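
The following is a minimal two-state tracker that shows how a Kalman-filter-type model can anticipate the soloist. The state is the time of the latest solo note onset plus the current number of seconds per beat. Each observed onset corrects both quantities, and the filtered state predicts when a note a given number of beats ahead should sound. The state layout, the noise values `q` and `r`, and the function names are assumptions for illustration; the project's actual model is not specified here.

```python
def kalman_step(x, P, d_beats, z, q=(1e-4, 1e-4), r=1e-3):
    """One predict/update cycle of a constant-tempo Kalman filter.

    x = (onset_time_s, seconds_per_beat); P = 2x2 covariance as a list of lists.
    d_beats: score distance in beats from the previous note to this one.
    z: observed onset time (s) of this note. q: process noise; r: onset measurement noise.
    """
    t, p = x
    # Predict with F = [[1, d], [0, 1]]: the onset advances by d beats at the current tempo.
    t_pred, p_pred = t + d_beats * p, p
    a, b, c = P[0][0], P[0][1], P[1][1]  # P is symmetric
    pa = a + 2 * d_beats * b + d_beats * d_beats * c + q[0]
    pb = b + d_beats * c
    pc = c + q[1]
    # Update with H = [1, 0]: only the onset time is observed.
    s = pa + r
    k0, k1 = pa / s, pb / s
    innovation = z - t_pred
    x_new = (t_pred + k0 * innovation, p_pred + k1 * innovation)
    P_new = [[(1 - k0) * pa, (1 - k0) * pb],
             [(1 - k0) * pb, pc - k1 * pb]]
    return x_new, P_new


def predict_onset(x, d_beats):
    """Anticipated onset time (s) of a note d_beats after the latest one."""
    return x[0] + d_beats * x[1]


# A soloist playing steadily at 0.5 s per beat, starting from a guess of 0.6 s per beat.
x, P = (0.0, 0.6), [[1e-3, 0.0], [0.0, 1e-2]]
for beat in range(1, 11):
    x, P = kalman_step(x, P, 1, 0.5 * beat)
next_onset = predict_onset(x, 1)  # approximately 5.5 s after ten onsets
```

Raising `q` lets the tempo estimate follow rubato more quickly, at the cost of noisier predictions.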

You can find a demo of this project here.

Yupeng Gu is a third-year PhD student in music informatics. Yupeng is interested in problems related to computer-assisted performance of classical music.

Pitch Spelling - Gabi Teodoru

"Should this be a C-sharp or a D-flat?" is the type of question our algorithm can answer with over 99% accuracy. Musicians are used to seeing sheet music complete with the correct spelling. However, most electronic-format musical scores available on the Internet have no spelling information whatsoever (they just instruct the musician to "play the 73rd piano key"). It is just as hard for a musician to play from an incorrectly spelled score as it is for a reader to make sense of a sentence like "Eye wood like two show ewe my knew hoarse."

The algorithm works by modeling the current key, as well as the scale degree of each voice independently, within a probabilistic framework, and then finding the most probable spelling. For more details, see the paper here.
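
A toy version of "model the key, then pick the most probable spelling" fits in a few lines if spellings are placed on the line of fifths (... Bb, F, C, G, D, A, E, B, F#, ...). The sketch assumes a single global major key instead of the per-voice, time-varying model described above. Its score stands in for a log-probability: 0 inside the key's seven diatonic spellings, falling by one per step outside them. All names and the scoring rule are hypothetical.

```python
LETTER_FIFTHS = {"F": -1, "C": 0, "G": 1, "D": 2, "A": 3, "E": 4, "B": 5}  # line-of-fifths position
LETTER_PC = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}
ACCIDENTAL = {-1: "b", 0: "", 1: "#"}


def candidates(midi):
    """All (letter, accidental) spellings of a MIDI pitch using at most one sharp or flat."""
    pc = midi % 12
    return [(letter, acc) for letter in LETTER_PC for acc in (-1, 0, 1)
            if (LETTER_PC[letter] + acc) % 12 == pc]


def fifths(spelling):
    """Line-of-fifths position; each sharp moves 7 steps right, each flat 7 steps left."""
    letter, acc = spelling
    return LETTER_FIFTHS[letter] + 7 * acc


def log_score(spelling, tonic):
    """Toy log-probability (up to a constant) of a spelling in the major key whose tonic
    sits at `tonic` on the line of fifths: 0 for its diatonic notes [tonic-1, tonic+5]."""
    f = fifths(spelling)
    return -max(tonic - 1 - f, f - (tonic + 5), 0)


def spell(midis):
    """Jointly choose the best-scoring key and the best spelling of each note in that key."""
    best = None
    for tonic in range(-7, 8):  # Cb major ... C# major
        chosen = [max(candidates(m), key=lambda s: log_score(s, tonic)) for m in midis]
        total = sum(log_score(s, tonic) for s in chosen)
        if best is None or total > best[0]:
            best = (total, chosen)
    return [letter + ACCIDENTAL[acc] for letter, acc in best[1]]


print(spell([62, 66, 69, 61]))  # ['D', 'F#', 'A', 'C#']
print(spell([70, 63, 65]))      # ['Bb', 'Eb', 'F']
```

The same pitch class 1 comes out as C# among sharp-key neighbours. Among flat-key neighbours it would come out as Db, which is the kind of context dependence the real model captures more carefully.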

Gabi has worked on this together with his advisor, Christopher Raphael.

Tuning Tutor - Gabi Teodoru

This is an ear-training program that focuses on the precise tuning of notes, intervals, and chords. Users must mark out-of-tune notes, or tune them to their appropriate frequencies, with higher difficulty levels requiring greater precision. These tasks may be performed under different tuning systems, such as equal temperament, meantone, or Werckmeister III. During training, students may use a diagram that displays the frequencies of the sounding pitch and the correct pitch relative to A-440 equal temperament. The same sonority can also be presented in two different tuning systems for comparison. The program thus complements classical ear-training software through its focus on distinguishing in-tune from slightly out-of-tune sonorities.
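
The arithmetic behind such a program is the cent, 1/1200 of an octave. A pitch's deviation from a reference is 1200 · log2(f / f_ref), and equal-tempered frequencies relative to A-440 follow from the MIDI note number. The sketch below computes both and checks two textbook deviations. The just major third lies about 13.7 cents below its equal-tempered counterpart, and the quarter-comma meantone fifth is about 5.4 cents narrower than a pure 3:2. The `tolerance_cents` threshold in `is_out_of_tune` is a hypothetical stand-in for a difficulty level.

```python
import math


def equal_tempered_freq(midi, a4=440.0):
    """Frequency (Hz) of a MIDI note in 12-tone equal temperament with A4 (MIDI 69) = a4."""
    return a4 * 2 ** ((midi - 69) / 12)


def cents(f, f_ref):
    """Signed distance from f_ref to f in cents (positive = sharp)."""
    return 1200 * math.log2(f / f_ref)


def is_out_of_tune(f, f_target, tolerance_cents):
    """Hypothetical difficulty check: smaller tolerances demand finer discrimination."""
    return abs(cents(f, f_target)) > tolerance_cents


# Just major third above A4 (5:4 -> 550 Hz) versus the equal-tempered C#5 (MIDI 73).
print(round(cents(550.0, equal_tempered_freq(73)), 2))  # -13.69
# Quarter-comma meantone fifth (ratio 5 ** 0.25) versus a pure 3:2 fifth.
print(round(cents(5 ** 0.25, 1.5), 2))  # -5.38
```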

The software is primarily designed for choral conducting students, for whom fine-tuning sonorities is an everyday occurrence. However, orchestral conducting students and instrumentalists can also benefit from the skills developed by using this program. You can get the tuning tutor here.

Gabi has worked on this together with his advisor, Christopher Raphael, and Jacobs School of Music conducting professor John Poole.

Kyung Ae Lim - InTune: A Musician's Intonation Visualization System

InTune is a free program that gives musicians visual feedback on their intonation, mainly for monophonic instruments. It has three views (music score, pitch trace, and spectrogram) in an interactive Windows environment controlled by mouse and keyboard. These views are synchronized with one another and with the audio. The backbone of the program is a score-following system, on top of which pitch estimation is implemented. Thirty-three musicians, from second-grade children to faculty members at the Jacobs School of Music, provided feedback on this user-centered program.
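
A score follower makes pitch estimation easier because the expected note is already known. The search can therefore be confined to a narrow range around that note, which helps avoid octave errors. The sketch below assumes that design. It uses plain autocorrelation with parabolic peak interpolation and then reports the deviation in cents. InTune's actual estimator is not described here, and the function names and the `search_cents` parameter are hypothetical.

```python
import math


def estimate_f0(frame, sr, expected_hz, search_cents=100):
    """Autocorrelation pitch estimate, searching only lags within +/- search_cents
    of the pitch the score follower expects at this moment."""
    hi_hz = expected_hz * 2 ** (search_cents / 1200)
    lo_hz = expected_hz * 2 ** (-search_cents / 1200)
    min_lag = max(2, int(sr / hi_hz))
    max_lag = min(len(frame) - 2, math.ceil(sr / lo_hz))

    def acf(lag):
        return sum(frame[t] * frame[t + lag] for t in range(len(frame) - lag))

    best = max(range(min_lag, max_lag + 1), key=acf)
    # Fit a parabola through the peak and its neighbours for sub-sample precision.
    a, b, c = acf(best - 1), acf(best), acf(best + 1)
    denom = a - 2 * b + c
    shift = 0.5 * (a - c) / denom if denom != 0 else 0.0
    return sr / (best + shift)


def intonation_cents(f0, expected_hz):
    """How sharp (+) or flat (-) the played note is relative to the score, in cents."""
    return 1200 * math.log2(f0 / expected_hz)


# A slightly sharp A4 (445 Hz) where the score expects 440 Hz.
sr = 8000
frame = [math.sin(2 * math.pi * 445 * t / sr) for t in range(2000)]
f0 = estimate_f0(frame, sr, 440.0)
deviation = intonation_cents(f0, 440.0)  # the exact offset of 445 Hz is about +19.6 cents
```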

Kyung Ae has worked on the InTune project with Prof. Christopher Raphael. The project was presented at SIGGRAPH 2009 and at the Sound and Music Computing Conference 2009 (paper). You can download InTune here.

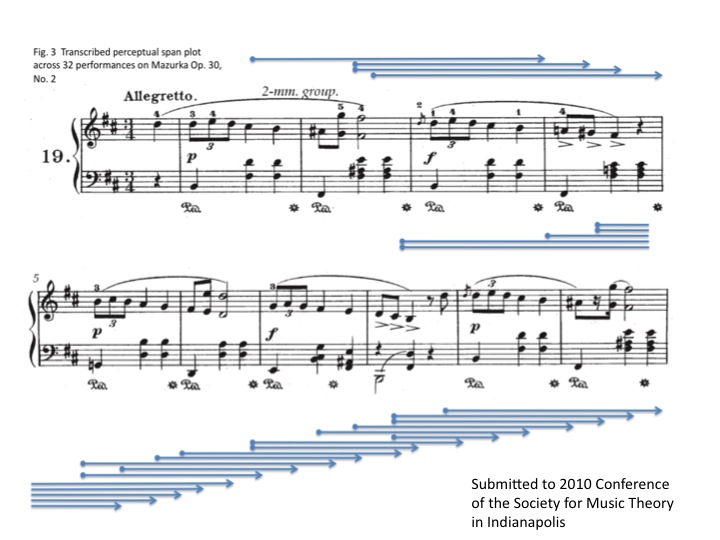

Computational Model to Estimate Expert Pianists' Perceptual Present

An interdisciplinary collaboration between Mitch Ohriner (music theory) and Yushen Han (music informatics)

Recent studies in diverse fields of inquiry, including music philosophy and psychology, offer converging evidence on musical attention. The attention of both performers and listeners is focused primarily on successive small "chunks" of material rather than on larger formal relationships, and these chunks are temporally bounded by the perceptual present.

We present a novel method of measuring the perceptual present from performance timing data. We reframe the question of its duration as a related inquiry: at a given location in the piece, how many previous beats are sufficient to predict the duration of the current beat? We address this question by building and testing probabilistic graphical models that include different ranges of previous beats in predicting the current one. We compute the likelihood of each model on timing data across all performers and perform statistical tests on each pair of models to exclude differences that might arise by chance. We then choose the model that incorporates the smallest range of previous beats without compromising predictive power.

A perceptual span plot across 32 performances of Mazurka Op. 30, No. 2 is shown as an example (from our submission to the 33rd conference of the Society for Music Theory, Indianapolis, 2010).
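
The selection logic can be illustrated with a much simpler stand-in for the graphical models. Predict each beat duration by linear regression on the k previous durations and score each k by its Gaussian log-likelihood. Then stop at the smallest k for which adding one more beat fails a likelihood-ratio test. This swaps in linear-Gaussian autoregression and a nested chi-square test for the authors' models and pairwise tests; 3.841 is the 95% point of chi-square with one degree of freedom. The sketch also works on a single timing sequence rather than pooling performers. All function names are hypothetical.

```python
import math
import random


def solve(A, b):
    """Solve the small dense system A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def ar_loglik(durations, k, max_k):
    """Maximized Gaussian log-likelihood of predicting each duration from the k previous
    ones plus an intercept. Every k uses the same targets (index max_k onward),
    so the models are nested and comparable."""
    rows = [[1.0] + [durations[t - j] for j in range(1, k + 1)]
            for t in range(max_k, len(durations))]
    y = durations[max_k:]
    p = k + 1
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    beta = solve(xtx, xty)  # least-squares coefficients via the normal equations
    rss = sum((yi - sum(bj * xj for bj, xj in zip(beta, r))) ** 2 for r, yi in zip(rows, y))
    n = len(y)
    return -0.5 * n * (math.log(2 * math.pi * rss / n) + 1)


def choose_span(durations, max_k=4, critical=3.841):
    """Smallest k such that adding the (k+1)-th previous beat is not a significant improvement."""
    ll = [ar_loglik(durations, k, max_k) for k in range(max_k + 1)]
    for k in range(max_k):
        if 2 * (ll[k + 1] - ll[k]) < critical:  # likelihood-ratio statistic, 1 extra parameter
            return k
    return max_k


# Synthetic timing: each beat duration leans strongly on the previous one.
rng = random.Random(0)
durations = [0.5]
for _ in range(200):
    durations.append(0.5 + 0.8 * (durations[-1] - 0.5) + rng.gauss(0, 0.01))
span = choose_span(durations)  # at least 1, since the previous beat is clearly informative
```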

Mitch Ohriner is a PhD student in Music Theory at the Jacobs School of Music, Indiana University Bloomington.